Abstract

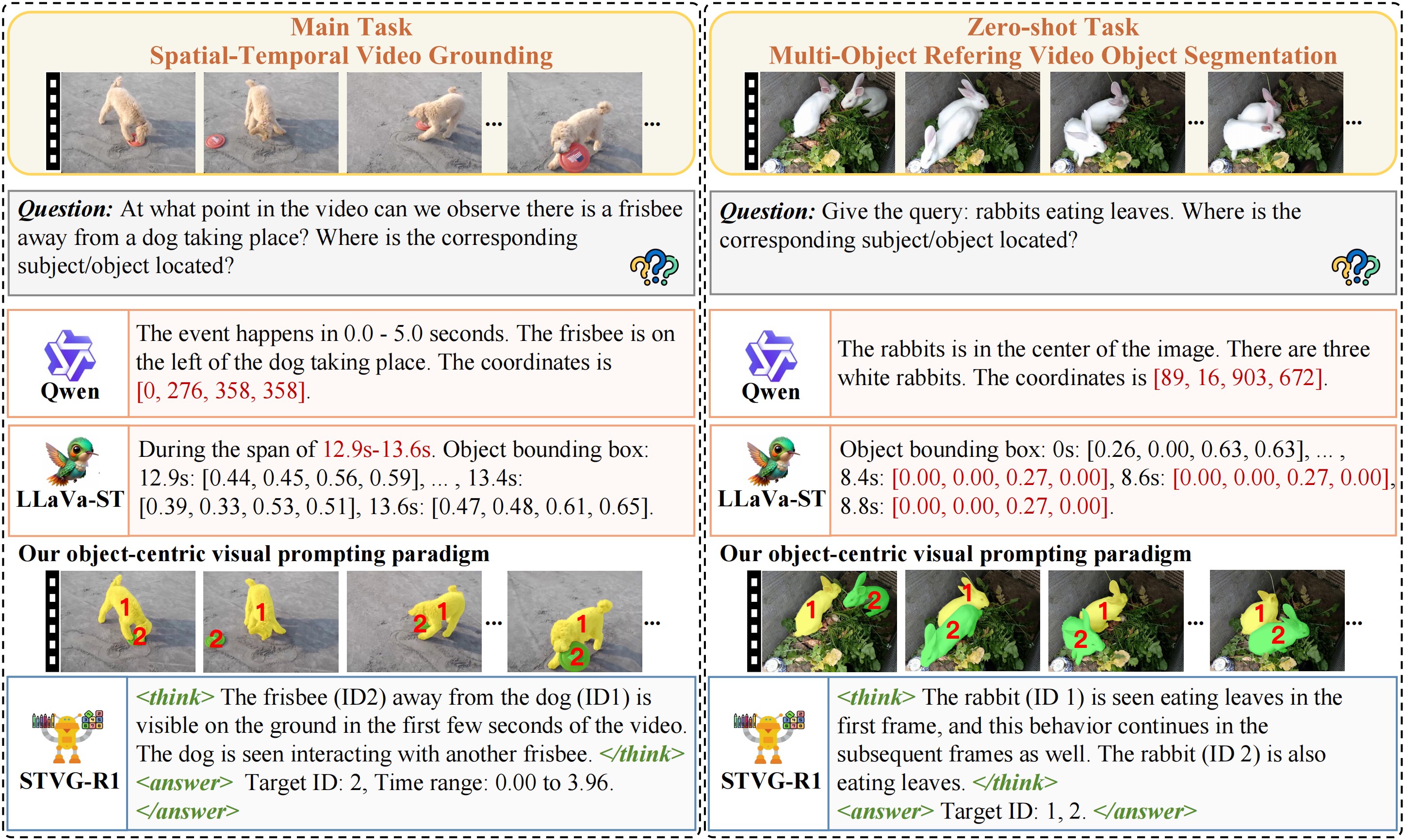

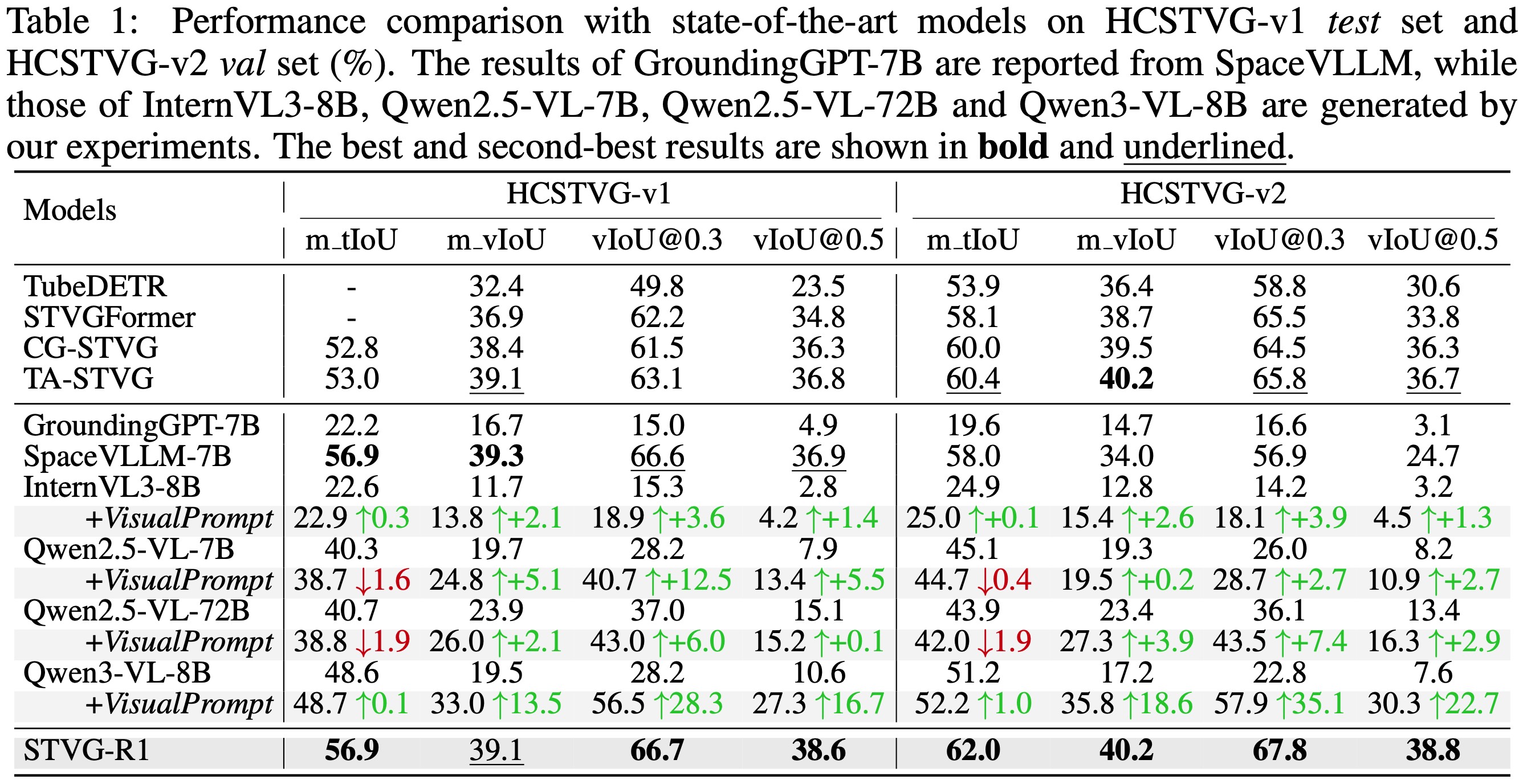

In vision–language models (VLMs), misalignment between textual descriptions and visual coordinates often induces hallucinations. This issue becomes particularly severe in dense prediction tasks such as spatial–temporal video grounding (STVG). Prior approaches typically focus on enhancing visual–textual alignment or attaching auxiliary decoders. However, these strategies inevitably introduce additional trainable modules, leading to significant annotation costs and computational overhead. In this work, we propose a novel visual prompting paradigm that avoids the difficult problem of aligning coordinates across modalities. Specifically, we reformulate per-frame coordinate prediction as a compact instance-level identification problem by assigning each object a unique, temporally consistent ID. These IDs are embedded into the video as visual prompts, providing explicit and interpretable inputs to the VLMs. Furthermore, we introduce STVG-R1, the first reinforcement learning framework for STVG, which employs a task-driven reward to jointly optimize temporal accuracy, spatial consistency, and structural format regularization. Extensive experiments on six benchmarks demonstrate the effectiveness of our approach. STVG-R1 surpasses the baseline Qwen2.5-VL-7B by a remarkable margin of 20.9% on m_IoU on the HCSTVG-v2 benchmark, establishing a new state of the art (SOTA). Surprisingly, STVG-R1 also exhibits strong zero-shot generalization to multi-object referring video object segmentation task, achieving a SOTA 47.3% J&F on MeViS.

Method

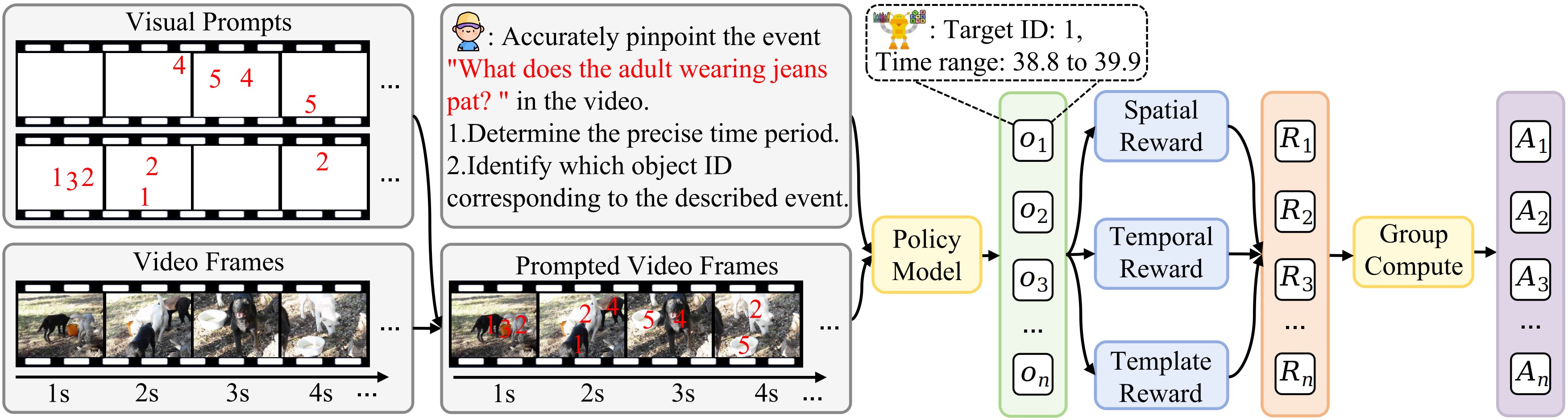

1. Object-Centric Visual Prompting. Each object in the video is assigned a unique, temporally consistent ID rendered as a small visual marker. These IDs transform dense coordinate prediction into a compact instance-level identification task, mitigating visual–textual misalignment and enabling interpretable grounding.

2. Reinforcement Learning with Structured Rewards. We optimize the policy with a task-driven reward that combines temporal accuracy, spatial consistency, and format regularization.

Temporal IoU reward. It quantifies the overlap between the predicted interval \([t_s,t_e]\) and the ground-truth segment \([t'_s,t'_e]\):

\[ r_{t}(o) = \frac{[t_s, t_e] \cap [t'_s, t'_e]}{[t_s, t_e] \cup [t'_s, t'_e]}. \]Here, \(A \cap B\) and \(A \cup B\) denote the intersection and union of intervals \(A\) and \(B\), respectively.

Spatial consistency reward. It verifies whether the predicted object ID is correct and appears within the localized temporal segment:

\[ r_{s}(o) = \begin{cases} 1, & \text{if } \imath = \imath^{*} \text{ and } \imath \text{ appears in } [t_s, t_e],\\ 0, & \text{otherwise.} \end{cases} \]Here, \(\imath\) and \(\imath^{*}\) denote the predicted and ground-truth object ID. This design is consistent with the vIoU metric in STVG, defined as \(\lvert P_u\rvert^{-1}\!\sum_{t\in P_i}\operatorname{IoU}(b_t, b_t^{*})\), where \(P_i\) and \(P_u\) are the intersection and union of the predicted and ground-truth temporal segments, and \(b_t\) and \(b_t^{*}\) are the predicted and ground-truth bounding boxes at frame \(t\).

Format reward \(r_f(o)\). A value of 1 is assigned only if the response encloses the reasoning within <think>...</think> and the final prediction within <answer>...</answer>. Reasoning traces with timestamps and instance IDs provide clearer references and more precise grounding.

Overall reward. The full objective is the sum of the three components:

\[ R(o) = r_{t}(o) + r_{s}(o) + r_{f}(o). \]Performance

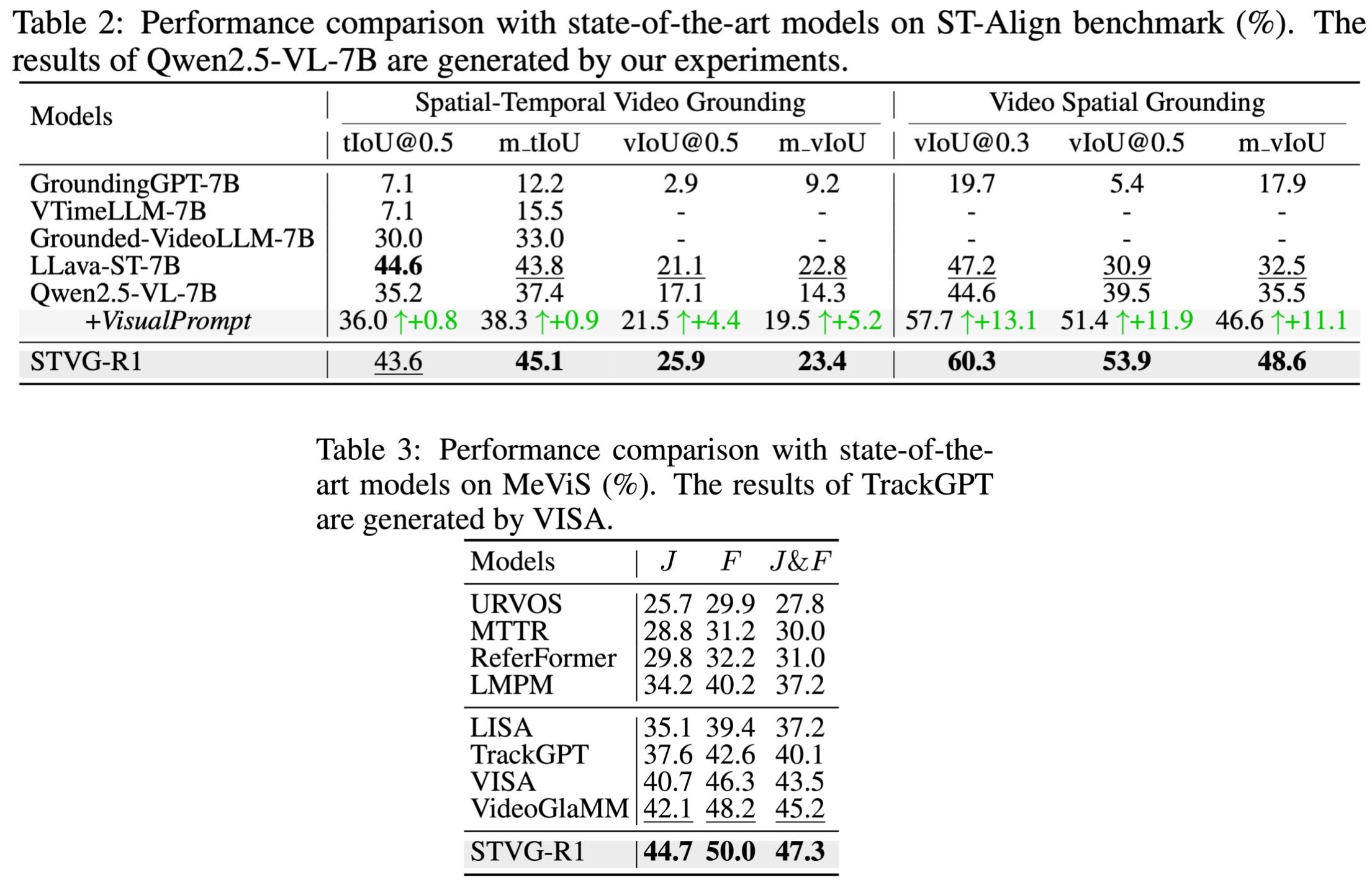

We evaluate STVG-R1 on spatial–temporal video grounding (STVG), spatial video grounding (SVG), temporal video grounding (VTG), and referring video object segmentation (RVOS). The results show that our method consistently surpasses both task-specific dense prediction models and general-purpose vision–language models (VLMs), validating the effectiveness of our object-centric visual prompting paradigm and reinforcement learning framework.

1. STVG Results. STVG-R1 achieves new SOTA results on HCSTVG-v1, HCSTVG-v2, and ST-Align, improving the baseline Qwen2.5-VL-7B by up to +20.9% m_vIoU on HCSTVG-v2. In the zero-shot setting, simply adding the visual prompt on Qwen3-VL-8B brings an additional +18.6% m_vIoU improvement on the HCSTVG-v2.

2. SVG and RVOS Results. In the more challenging MeViS benchmark, STVG-R1 reaches a J&F of 47.3%, showing strong zero-shot generalization to multi-object segmentation tasks, despite being trained only on single-object grounding data.

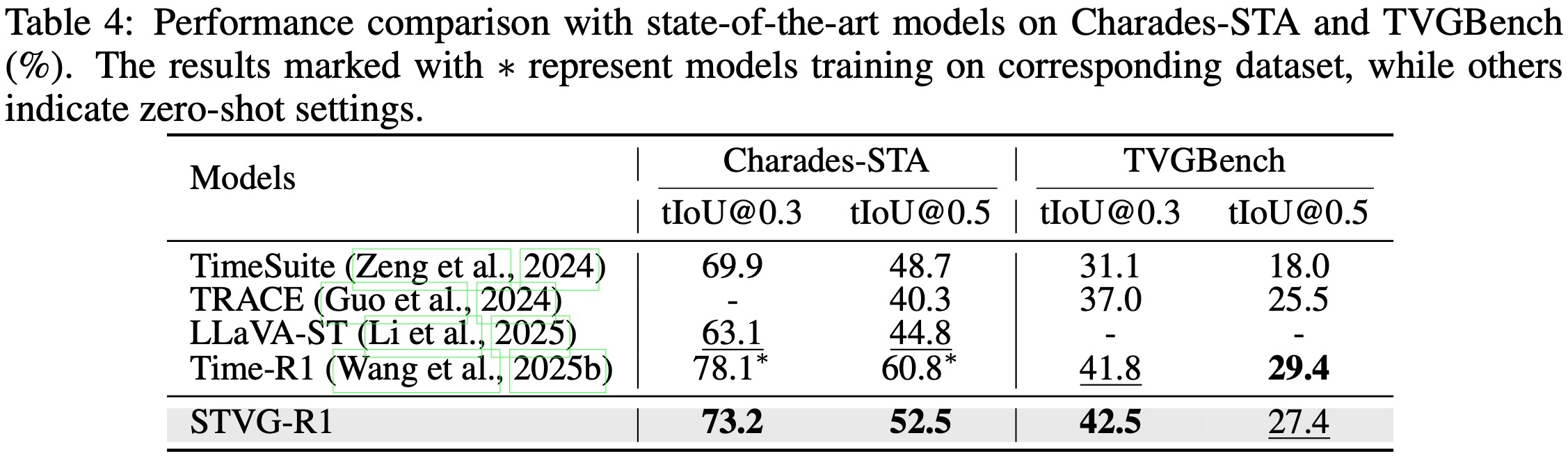

3. VTG Results. For out-of-distribution temporal grounding, STVG-R1 achieves tIoU@0.5 = 52.5% on Charades-STA and competitive tIoU@0.3 = 42.5% on TVGBench, demonstrating robust temporal reasoning ability.

Visualization

Spatial Temporal Video Grounding

Description: The woman in yellow clothes puts her phone down, stands up, and turns around and walks in front of the man in white.

Output:

<think>

To determine the event "The woman in yellow clothes puts her phone down, stands up, and turns around and walks in front of the man in white," I need to analyze the sequence of actions:

1. The woman in yellow clothes (Object ID 1) is initially seated and appears to be holding a phone.

2. She then puts the phone down on the desk.

3. She stands up from her seat.

4. She turns around and walks in front of the man in white (Object ID 2).

Based on this sequence, the event likely occurs between the moment she puts the phone down and the moment she starts walking in front of the man in white.

Temporal Analysis:

- The woman puts her phone down around 0.50 seconds.

- She stands up around 1.00 seconds.

- She turns around and walks in front of the man in white around 3.50 seconds.

Therefore, the event occurs between 1.00 and 5.00 seconds.

</think>

<answer> Target ID: 1, Time range: 1.00 to 5.00 </answer>

Description: The boy to the left of the woman stands up and turns.

Output:

<think> To determine the event "The boy to the left of the woman stands up and turns," I need to identify the boy who is positioned to the left of the woman. In this scene, the boy wearing a striped shirt is seated next to the woman. As the video progresses, this boy stands up and begins to turn his body away from the table. The action happens around the 15-second mark. </think>

<answer> Target ID: 3, Time range: 15.00 to 19.99 </answer>

Description: The blue man walks a few steps forward as he speaks, and then sits down.

Output:

<think> To determine the event "The blue man walks a few steps forward as he speaks, and then sits down," I need to identify the blue man first. The blue man is wearing a blue robe and a black hat. In the video, the blue man (Object ID 4) starts by standing still and then begins to walk forward while speaking. After walking a few steps, he stops and sits down on the ground. This sequence happens between 10.00 and 16.99 seconds. </think>

<answer> Target ID: 4, Time range: 10.00 to 16.99 </answer>

Description: The man in red clothes says a few words to the man in a white shirt and walks to the man without a hat.

Output:

<think> To determine the event "The man in red clothes says a few words to the man in a white shirt and walks to the man without a hat," I need to identify the individuals involved and analyze their actions.

1. The man in red clothes is labeled as Object ID 5. 2. The man in a white shirt is labeled as Object ID 1. 3. The man without a hat is labeled as Object ID 3. The sequence of events can be observed as follows: - Object ID 5 approaches Object ID 1. - Object ID 5 appears to be speaking to Object ID 1. - Object ID 5 then moves towards Object ID 3. Based on this observation, the event occurs between approximately 6.90 and 14.90 seconds. </think><answer> Target ID: 5, Time range: 6.90 to 14.90 </answer>

Multi-Object Referring Video Object Segmentation

Description: Except for the two bears in front, all other bears in the distance walking.

Output:

<think> To identify the event 'Except for the two bears in front, all other bears in the distance walking', I need to focus on the bears that are further away from the camera. In this sequence, there are five bears visible, and they appear to be moving across the grassy field. The two bears in the foreground are labeled as 2 and 4, while the others are labeled as 1, 3, and 5. The bears labeled as 1, 3, and 5 are positioned further back in the scene and seem to be walking away from the camera. </think>

<answer> Target ID: 1, 3, 5 </answer>

Description: two moving cats

Output:

<think> To identify the event 'two moving cats', I need to analyze the movement of the cats in the video. The cats labeled as 2 and 3 appear to be the ones that are moving. Cat 2 is standing up and seems to be walking away from the camera, while cat 3 is also moving but it's not as clear if it's walking or just shifting position. Cat 1 remains stationary throughout the video. </think>

<answer> Target ID: 2, 3 </answer>

Description: front elephant walking to backwards

Output:

<think> To determine the event 'front elephant walking to backwards', I need to observe the movement of the elephants. In the video, the front elephant (ID 1) starts facing forward and then turns around to walk backward. This transition happens gradually over a few seconds. </think>

<answer> Target ID: 1 </answer>

Description: Dogs moving around

Output:

<think> The event 'Dogs moving around' seems to occur throughout the video, as the dogs are continuously interacting with each other. The black dog (ID 1) and the white dog (ID 3) are seen moving around and playing with each other. The yellow bag (ID 2) remains stationary, and the other objects (ID 4 and ID 5) do not seem to be involved in the movement. </think>

<answer> Target ID: 1, 3 </answer>

BibTeX

@misc{zhang2025chain,

title={Chain-of-Focus: Adaptive Visual Search and Zooming for Multimodal Reasoning via RL},

author={Xintong Zhang and Zhi Gao and Bofei Zhang and Pengxiang Li and Xiaowen Zhang and Yang Liu and Tao Yuan and Yuwei Wu and Yunde Jia and Song-Chun Zhu and Qing Li},

year={2025},

eprint={2505.15436},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2505.15436},

}